Introduction: Why Your MCP Server Probably Doesn't Work in Production

Last month I watched a team deploy an MCP server to production. It had three tools, worked great in testing, and broke spectacularly under load.

The failures weren't due to code bugs. The code was fine. The failures were architectural. No permission checking. No state persistence. No audit logging. When the server restarted, it forgot everything. When multiple agents accessed it simultaneously, they stepped on each other's state.

It was a prototype. They treated it like production.

This is the story of how to tell the difference.

The Problem: Prototype vs Production

Here's the trap most teams fall into:

Prototypes are fast. Throw a few functions in a TypeScript file, define them as MCP tools, wire up a simple HTTP server. Boom. You have something that works.

Production systems are slow. They require architecture decisions. Authentication. Authorisation. State management. Observability. Monitoring. Retries. Error handling. Rate limiting.

The jump from prototype to production isn't a code change. It's a systems engineering change.

And most teams skip it entirely.

They deploy their prototype to production, it works for three weeks, and then it fails in subtle, cascading ways. Users complain. The team scrambles to patch it. Six months later, they're running a dumpster fire called "production" that's actually just a prototype that broke.

I'm going to show you how to skip that journey.

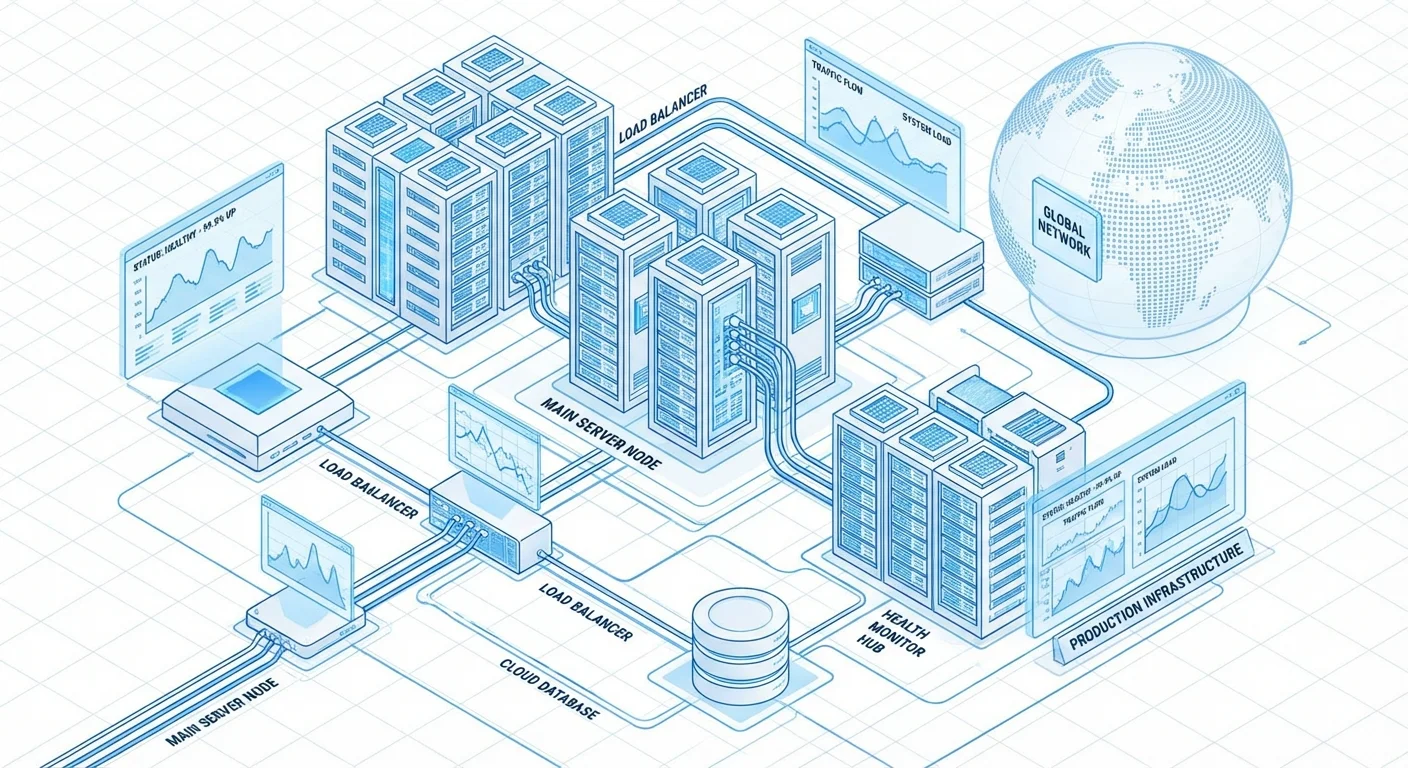

The Production MCP Server Architecture

Think of a production MCP server like this:

    ┌────────────────────────────────────────┐
    │ Agent / LLM Interface                  │
    └────────────────────────────────────────┘
                         ↓
    ┌────────────────────────────────────────┐
    │ Authentication / OAuth 2.1 Layer       │
    │   - Token validation                   │
    │   - Scope verification                 │
    │   - Permission enforcement             │
    └────────────────────────────────────────┘
                         ↓
    ┌────────────────────────────────────────┐
    │ Tool Registry & Discovery              │
    │   - Dynamic tool loading               │
    │   - Permission-based filtering         │
    │   - Tool versioning                    │
    └────────────────────────────────────────┘
                         ↓
    ┌────────────────────────────────────────┐
    │ State Management Layer                 │
    │   - Database persistence               │
    │   - Transaction handling               │
    │   - Concurrent access control          │
    └────────────────────────────────────────┘
                         ↓
    ┌────────────────────────────────────────┐
    │ Observability & Logging                │
    │   - Structured logging                 │
    │   - Metrics collection                 │
    │   - Audit trails                       │
    └────────────────────────────────────────┘
                         ↓
    ┌────────────────────────────────────────┐
    │ Business Logic (Your Tools)            │
    └────────────────────────────────────────┘

This isn't optional. If you're missing any of these layers, you don't have a production system. You have a prototype running in production.

Layer 1: OAuth 2.1 Authentication

Most MCP servers skip this entirely. They use an API key, maybe hardcoded in environment variables, and call it a day.

This doesn't work. Here's why:

- A hardcoded API key typically never expires

- It can't be revoked without redeploying or restarting the server

- It provides zero permission granularity: every caller can do everything

- It leaves no per-agent audit trail, because every caller looks the same

OAuth 2.1 addresses each of these problems.

Minimum Implementation:

    // auth.ts
    // 'oauth-library' is a placeholder: substitute your provider's SDK.
    import { verifyToken, getScopes } from 'oauth-library'

    interface AuthContext {
      agentId: string
      scopes: string[]
      tokenExpiresAt: number // milliseconds since the Unix epoch
    }

    export async function validateRequest(
      authHeader: string | null
    ): Promise<AuthContext> {
      // Reject missing or non-Bearer headers before touching the token
      if (!authHeader || !authHeader.startsWith('Bearer ')) {
        throw new Error('Missing or malformed Authorization header')
      }
      const token = authHeader.slice('Bearer '.length).trim()

      // Verify token is valid and not expired
      const payload = await verifyToken(token)
      if (!payload) {
        throw new Error('Invalid token')
      }

      // Extract scopes
      const scopes = getScopes(token)

      return {
        agentId: payload.sub,
        scopes,
        tokenExpiresAt: payload.exp * 1000, // exp is in seconds
      }
    }

    // In your request handler (executeTool is your own dispatcher)
    export async function handleToolCall(
      toolName: string,
      authHeader: string | null,
      params: unknown
    ) {
      const auth = await validateRequest(authHeader)

      // Enforce scope-based permissions
      const requiredScope = `tool:${toolName}`
      if (!auth.scopes.includes(requiredScope)) {
        throw new Error(`Insufficient permissions for ${toolName}`)
      }

      // Continue with tool execution
      return executeTool(toolName, params, auth.agentId)
    }

What You Get:

- Short-lived tokens (they expire in minutes, not years)

- Revocation support (token database, not hardcoded)

- Fine-grained permissions (scope-based)

- Audit trail (who called what, when)

Implementation Cost: 1-2 days if you're using an existing OAuth provider (Auth0, Stytch, etc.). Much longer if you're building your own.

Recommendation: Use an existing provider. Building OAuth is a security nightmare.
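One detail the minimum implementation leaves to the provider is how strictly you parse the header and check the token's claims. Here's a minimal sketch of that step, assuming the verified token exposes a space-delimited `scope` claim and an `exp` claim in seconds. The names `TokenClaims`, `extractBearerToken`, and `hasScope` are hypothetical helpers for illustration, not part of any OAuth library.

```typescript
// Hypothetical claim shape: adjust to whatever your provider actually returns.
interface TokenClaims {
  sub: string
  scope: string // space-delimited list of scopes
  exp: number // expiry, in seconds since the Unix epoch
}

// Pulls the token out of an "Authorization: Bearer <token>" header value.
function extractBearerToken(authHeader: string | null): string {
  if (!authHeader) {
    throw new Error('Missing Authorization header')
  }
  const match = /^Bearer\s+(\S+)$/i.exec(authHeader.trim())
  const token = match ? match[1] : undefined
  if (!token) {
    throw new Error('Malformed Authorization header')
  }
  return token
}

// True only if the token is unexpired and carries the required scope.
function hasScope(
  claims: TokenClaims,
  required: string,
  nowMs: number = Date.now()
): boolean {
  if (claims.exp * 1000 <= nowMs) {
    return false
  }
  return claims.scope.split(' ').includes(required)
}
```

Passing the clock in as `nowMs` keeps the expiry check easy to test. Signature verification still belongs to your provider's SDK; this only covers what happens around it.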

Layer 2: Permission Management with Enforcement

The OAuth layer tells you who is calling and which scopes their token carries (who are you?). You also need authorisation on your side (what are you allowed to do, right now, on this server?).

These are different concerns.

Minimum Implementation:

    // permissions.ts
    import Database from 'better-sqlite3'

    interface ToolPermission {
      agentId: string
      toolName: string
      scope: string
      grantedAt: Date
      expiresAt?: Date
    }

    const db = new Database('permissions.db')

    export async function canAccessTool(
      agentId: string,
      toolName: string
    ): Promise<boolean> {
      // Timestamps are stored as ISO 8601 UTC strings, which sort chronologically.
      // SQLite has no NOW(), so the current time is passed in as a parameter.
      const permission = db.prepare(
        `SELECT 1 FROM permissions
         WHERE agentId = ? AND toolName = ?
         AND (expiresAt IS NULL OR expiresAt > ?)`
      ).get(agentId, toolName, new Date().toISOString())
      return permission !== undefined
    }

    export async function grantPermission(
      agentId: string,
      toolName: string,
      expiresAt?: Date
    ): Promise<void> {
      db.prepare(
        `INSERT INTO permissions (agentId, toolName, grantedAt, expiresAt)
         VALUES (?, ?, ?, ?)`
      ).run(
        agentId,
        toolName,
        new Date().toISOString(),
        expiresAt ? expiresAt.toISOString() : null
      )
    }

    // In your tool execution (logAccess and executeTool are defined elsewhere)
    export async function executeToolSafely(
      agentId: string,
      toolName: string,
      params: unknown
    ) {
      // Check permissions
      const hasAccess = await canAccessTool(agentId, toolName)
      if (!hasAccess) {
        throw new Error(`Agent ${agentId} not authorised for ${toolName}`)
      }

      // Log the access
      await logAccess(agentId, toolName, params)

      // Execute
      return executeTool(toolName, params)
    }

What You Get:

- Fine-grained access control (per agent, per tool)

- Time-limited permissions (they expire at a specific date)

- Audit log (who accessed what, and when)

- Enforcement at runtime (not just documentation)

Critical Point: Permissions must be checked every single time a tool is called. Not cached, not assumed, not "probably still valid." Every time.

Layer 3: State Management

Here's where most prototype MCP servers fail catastrophically.

You call a tool. It modifies state. The server crashes or restarts. The state is gone.

In a prototype, this might be fine. In production, it's a disaster.

Minimum Implementation:

    // state.ts
    import Database from 'better-sqlite3'

    const db = new Database('state.db')

    interface StateEntry {
      key: string
      value: string
      agentId: string
      createdAt: Date
      updatedAt: Date
      version: number
    }

    export async function getState(
      agentId: string,
      key: string
    ): Promise<string | null> {
      const row = db.prepare(
        'SELECT value FROM state WHERE agentId = ? AND key = ?'
      ).get(agentId, key) as Pick<StateEntry, 'value'> | undefined
      // ?? rather than ||, so an empty-string value is not treated as missing
      return row?.value ?? null
    }

    export async function setState(
      agentId: string,
      key: string,
      value: string
    ): Promise<void> {
      // SQLite has no NOW(), so the timestamp is passed in as a parameter
      const now = new Date().toISOString()
      const existing = db.prepare(
        'SELECT version FROM state WHERE agentId = ? AND key = ?'
      ).get(agentId, key) as Pick<StateEntry, 'version'> | undefined

      if (existing) {
        // Update with optimistic locking
        const result = db.prepare(
          `UPDATE state
           SET value = ?, updatedAt = ?, version = version + 1
           WHERE agentId = ? AND key = ? AND version = ?`
        ).run(value, now, agentId, key, existing.version)
        // Zero rows changed means another writer bumped the version first
        if (result.changes === 0) {
          throw new Error(`Concurrent modification of ${key} for agent ${agentId}`)
        }
      } else {
        // Insert new
        db.prepare(
          `INSERT INTO state (agentId, key, value, createdAt, updatedAt, version)
           VALUES (?, ?, ?, ?, ?, 0)`
        ).run(agentId, key, value, now, now)
      }
    }

    // Example: A tool that needs state
    export async function incrementCounter(agentId: string) {
      const current = await getState(agentId, 'counter')
      const count = parseInt(current || '0', 10)
      await setState(agentId, 'counter', String(count + 1))
      return { count: count + 1 }
    }

What You Get:

- Persistent state (survives server restarts)

- Concurrent access safety (optimistic locking)

- Per-agent isolation (agents don't see each other's state)

- Versioning (detect concurrent modifications)

Implementation Cost: Half a day if you're using SQLite. Longer if you need replication or clustering.

Critical Point: Use optimistic locking or transactions. Without this, concurrent access will corrupt your data.
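Optimistic locking only helps if a failed version check leads to a retry instead of a silent lost write. The sketch below shows that read, compute, compare-and-swap loop against an in-memory stand-in. `VersionedStore` and `updateWithRetry` are hypothetical names; with a real database the compare-and-swap would be a single `UPDATE ... WHERE version = ?` whose affected-row count tells you whether you won.

```typescript
interface Versioned {
  value: string
  version: number
}

// In-memory stand-in for the state table (an assumption for illustration).
class VersionedStore {
  private rows = new Map<string, Versioned>()

  read(key: string): Versioned | undefined {
    const row = this.rows.get(key)
    return row ? { ...row } : undefined
  }

  // Writes only if the stored version still matches what the caller read.
  // expectedVersion is null when the caller saw no row at all.
  compareAndSwap(key: string, expectedVersion: number | null, value: string): boolean {
    const current = this.rows.get(key)
    const currentVersion = current ? current.version : null
    if (currentVersion !== expectedVersion) {
      return false
    }
    this.rows.set(key, { value, version: (currentVersion ?? -1) + 1 })
    return true
  }
}

// Re-reads and recomputes whenever another writer got in first.
async function updateWithRetry(
  store: VersionedStore,
  key: string,
  update: (current: string | null) => string | Promise<string>,
  maxAttempts = 5
): Promise<string> {
  for (let attempt = 1; attempt <= maxAttempts; attempt++) {
    const row = store.read(key)
    const next = await update(row ? row.value : null)
    if (store.compareAndSwap(key, row ? row.version : null, next)) {
      return next
    }
  }
  throw new Error(`Gave up on ${key} after ${maxAttempts} conflicting writes`)
}
```

The important part is that the update function runs again on the fresh value after a conflict. Retrying the write with the stale value would just reintroduce the lost update.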

Layer 4: Observability & Logging

Production systems fail in ways you can't anticipate. You need visibility.

Minimum Implementation:

    // logging.ts
    import pino from 'pino'

    const isProduction = process.env.NODE_ENV === 'production'

    const logger = pino({
      level: process.env.LOG_LEVEL || 'info',
      // Pretty-print only in development; production keeps pino's default JSON output
      ...(isProduction
        ? {}
        : { transport: { target: 'pino-pretty', options: { colorize: true } } }),
    })

    interface ToolCallMetrics {
      agentId: string
      toolName: string
      duration: number
      status: 'success' | 'error'
      errorMessage?: string
      paramSize: number
      resultSize: number
    }

    export async function logToolCall(
      metrics: ToolCallMetrics
    ): Promise<void> {
      logger[metrics.status === 'error' ? 'error' : 'info'](
        {
          type: 'tool_call',
          agentId: metrics.agentId,
          toolName: metrics.toolName,
          duration: metrics.duration,
          errorMessage: metrics.errorMessage,
          paramSize: metrics.paramSize,
          resultSize: metrics.resultSize,
        },
        `Tool call: ${metrics.toolName}`
      )

      // Persist to metrics database for analysis
      // (metricsDb is your metrics store client, defined elsewhere)
      await metricsDb.insert({
        timestamp: new Date(),
        ...metrics,
      })
    }

    // In your tool handler
    export async function executeToolWithMetrics(
      agentId: string,
      toolName: string,
      params: unknown
    ) {
      const startTime = Date.now()
      // JSON.stringify(undefined) returns undefined, so fall back to null
      const paramSize = JSON.stringify(params ?? null).length

      try {
        const result = await executeTool(toolName, params)
        await logToolCall({
          agentId,
          toolName,
          duration: Date.now() - startTime,
          status: 'success',
          paramSize,
          resultSize: JSON.stringify(result ?? null).length,
        })
        return result
      } catch (error) {
        await logToolCall({
          agentId,
          toolName,
          duration: Date.now() - startTime,
          status: 'error',
          // A caught value is not guaranteed to be an Error instance
          errorMessage: error instanceof Error ? error.message : String(error),
          paramSize,
          resultSize: 0,
        })
        throw error
      }
    }

What You Get:

- Structured logging (JSON, parseable, queryable)

- Performance metrics (duration, sizes)

- Error tracking (what failed, why, when)

- Audit trail (compliance, debugging)

Implementation Cost: Half a day.

Putting It Together: A Complete Example

Here's what a production tool call looks like:

    export async function handleMCPRequest(request: Request) {
      // Declared outside try so the catch block can still report them
      let auth: Awaited<ReturnType<typeof validateRequest>> | undefined
      let toolName = 'unknown'
      const requestStart = Date.now()

      try {
        // 1. Validate authentication (a missing header becomes an empty string and fails)
        auth = await validateRequest(request.headers.get('authorization') ?? '')

        // 2. Parse request
        const body = JSON.parse(await request.text())
        toolName = body.toolName
        const params = body.params

        // 3. Check permissions
        const hasAccess = await canAccessTool(auth.agentId, toolName)
        if (!hasAccess) {
          return errorResponse(403, 'Insufficient permissions')
        }

        // 4. Get current state (if needed)
        const currentState = await getState(auth.agentId, 'session_context')

        // 5. Execute with metrics
        const startTime = Date.now()
        const result = await executeTool(toolName, params, {
          agentId: auth.agentId,
          state: currentState,
        })
        const duration = Date.now() - startTime

        // 6. Update state (if needed)
        if (result.newState) {
          await setState(auth.agentId, 'session_context', result.newState)
        }

        // 7. Log everything
        await logToolCall({
          agentId: auth.agentId,
          toolName,
          duration,
          status: 'success',
          paramSize: JSON.stringify(params).length,
          resultSize: JSON.stringify(result).length,
        })

        // 8. Return result
        return successResponse(result)
      } catch (error) {
        // A caught value is not guaranteed to be an Error instance
        const message = error instanceof Error ? error.message : String(error)
        await logToolCall({
          agentId: auth?.agentId ?? 'unknown',
          toolName,
          duration: Date.now() - requestStart,
          status: 'error',
          errorMessage: message,
          paramSize: 0,
          resultSize: 0,
        })
        return errorResponse(500, message)
      }
    }

This isn't fancy. But it covers the basics. Authentication. Permissions. State. Observability.

What Gets Missed

Here's what teams usually forget:

Rate limiting. Agents should have limits on how many calls they can make. Without this, a broken agent can burn your costs.
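For a sense of scale, a per-agent limiter can be very small. Here's a sketch using a token bucket, which is one common choice and my assumption here, not the only option. `AgentRateLimiter` and its parameters are hypothetical names, and the buckets live in memory, so a multi-instance deployment would need a shared store instead.

```typescript
interface Bucket {
  tokens: number
  lastRefillMs: number
}

class AgentRateLimiter {
  private buckets = new Map<string, Bucket>()
  private capacity: number
  private refillPerSecond: number
  private now: () => number

  // capacity: maximum burst size; refillPerSecond: sustained calls per second.
  // The clock is injectable so the limiter can be tested without waiting.
  constructor(capacity: number, refillPerSecond: number, now: () => number = Date.now) {
    this.capacity = capacity
    this.refillPerSecond = refillPerSecond
    this.now = now
  }

  // Returns true and consumes one token if the agent is within its limit.
  tryAcquire(agentId: string): boolean {
    const nowMs = this.now()
    const bucket = this.buckets.get(agentId) ?? { tokens: this.capacity, lastRefillMs: nowMs }

    // Top the bucket up for the time elapsed since the last call
    const elapsedSeconds = (nowMs - bucket.lastRefillMs) / 1000
    bucket.tokens = Math.min(this.capacity, bucket.tokens + elapsedSeconds * this.refillPerSecond)
    bucket.lastRefillMs = nowMs
    this.buckets.set(agentId, bucket)

    if (bucket.tokens < 1) {
      return false
    }
    bucket.tokens -= 1
    return true
  }
}
```

Call `tryAcquire(auth.agentId)` right after authentication and return a 429-style error when it comes back false, before any tool code runs.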

Circuit breakers. If a tool starts failing consistently, stop calling it. Return an error instead. Prevent cascading failures.
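A breaker is mostly a counter and a timestamp. The sketch below assumes a simple policy: open after a run of consecutive failures, reject calls during a cooldown, then let a trial call through. `CircuitBreaker`, its thresholds, and the injectable clock are all hypothetical choices for illustration.

```typescript
type BreakerState = 'closed' | 'open' | 'half-open'

class CircuitBreaker {
  private state: BreakerState = 'closed'
  private failures = 0
  private openedAtMs = 0
  private failureThreshold: number
  private cooldownMs: number
  private now: () => number

  constructor(failureThreshold = 5, cooldownMs = 30000, now: () => number = Date.now) {
    this.failureThreshold = failureThreshold
    this.cooldownMs = cooldownMs
    this.now = now
  }

  getState(): BreakerState {
    return this.state
  }

  async call<T>(fn: () => Promise<T>): Promise<T> {
    if (this.state === 'open') {
      if (this.now() - this.openedAtMs < this.cooldownMs) {
        // Fail fast without touching the broken tool
        throw new Error('Circuit open: tool temporarily disabled')
      }
      // Cooldown elapsed: allow a trial call
      this.state = 'half-open'
    }

    try {
      const result = await fn()
      // Any success resets the breaker
      this.failures = 0
      this.state = 'closed'
      return result
    } catch (error) {
      this.failures += 1
      // A failed trial call, or too many consecutive failures, opens the circuit
      if (this.state === 'half-open' || this.failures >= this.failureThreshold) {
        this.state = 'open'
        this.openedAtMs = this.now()
      }
      throw error
    }
  }
}
```

One breaker per tool is usually the right granularity: a failing tool should not block calls to the healthy ones.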

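Retry logic. Some failures are transient. Implement exponential backoff. But don't retry everything: only retry transient errors, and only for idempotent operations, so a repeated call can't apply the same change twice.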
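Here's a sketch of what that looks like in code, assuming the tool author marks retryable failures by throwing a dedicated error type and declares whether the operation is idempotent. `TransientError`, `withRetry`, and the option names are hypothetical; the random jitter on each delay is my addition, to keep retries from many agents from landing at the same instant.

```typescript
// Thrown by tools for failures that are worth retrying (timeouts, 503s, etc.)
class TransientError extends Error {}

interface RetryOptions {
  idempotent: boolean // never retry unless repeating the call is safe
  maxAttempts?: number
  baseDelayMs?: number
  sleep?: (ms: number) => Promise<void> // injectable for tests
}

const defaultSleep = (ms: number): Promise<void> =>
  new Promise<void>((resolve) => setTimeout(resolve, ms))

async function withRetry<T>(fn: () => Promise<T>, options: RetryOptions): Promise<T> {
  const { idempotent, maxAttempts = 4, baseDelayMs = 100, sleep = defaultSleep } = options

  for (let attempt = 1; ; attempt++) {
    try {
      return await fn()
    } catch (error) {
      const retryable = idempotent && error instanceof TransientError
      if (!retryable || attempt >= maxAttempts) {
        throw error
      }
      // Exponential backoff with jitter: random wait up to base * 2^(attempt - 1)
      const ceilingMs = baseDelayMs * 2 ** (attempt - 1)
      await sleep(Math.random() * ceilingMs)
    }
  }
}
```

A read-only lookup would pass `idempotent: true`. A tool that sends an email or charges a card would pass `false` and surface the first failure.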

Versioning. Your tools will change. Old agents shouldn't break. Support multiple versions, or deprecate gracefully.
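One way to support multiple versions is to key the registry by tool name and version number. This is a sketch under that assumption; `VersionedToolRegistry`, integer versions, and the `deprecated` flag are hypothetical choices, not something MCP prescribes.

```typescript
interface ToolVersion {
  version: number
  deprecated?: boolean
  handler: (params: unknown) => Promise<unknown>
}

class VersionedToolRegistry {
  private tools = new Map<string, ToolVersion[]>()

  register(name: string, tool: ToolVersion): void {
    const versions = this.tools.get(name) ?? []
    versions.push(tool)
    // Keep versions in ascending order so "latest" is easy to find
    versions.sort((a, b) => a.version - b.version)
    this.tools.set(name, versions)
  }

  // An explicit version is honoured even if deprecated, so old agents keep working.
  // Without a version, the newest non-deprecated one is returned.
  resolve(name: string, version?: number): ToolVersion {
    const versions = this.tools.get(name) ?? []
    const match =
      version === undefined
        ? [...versions].reverse().find((v) => !v.deprecated)
        : versions.find((v) => v.version === version)
    if (!match) {
      const label = version === undefined ? name : `${name}@${version}`
      throw new Error(`Unknown tool ${label}`)
    }
    return match
  }
}
```

Logging every call that resolves to a deprecated version tells you which agents still need migrating before you remove it.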

Monitoring. Set up alerts. If error rates spike, you want to know immediately. Not when your customer complains.
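In practice you'd usually hand this to your metrics platform, but the underlying check is simple enough to sketch. This assumes a sliding time window, an error-rate threshold, and a minimum call count so a single failure on a quiet server doesn't page anyone. `ErrorRateMonitor` and its defaults are hypothetical.

```typescript
interface CallEvent {
  atMs: number
  failed: boolean
}

class ErrorRateMonitor {
  private events: CallEvent[] = []
  private windowMs: number
  private threshold: number
  private minCalls: number
  private now: () => number

  constructor(windowMs = 60000, threshold = 0.2, minCalls = 10, now: () => number = Date.now) {
    this.windowMs = windowMs
    this.threshold = threshold
    this.minCalls = minCalls
    this.now = now
  }

  record(failed: boolean): void {
    this.events.push({ atMs: this.now(), failed })
  }

  // Fraction of failed calls inside the window; 0 when there is no traffic.
  errorRate(): number {
    const cutoff = this.now() - this.windowMs
    // Drop events that have aged out of the window
    this.events = this.events.filter((e) => e.atMs > cutoff)
    if (this.events.length === 0) {
      return 0
    }
    return this.events.filter((e) => e.failed).length / this.events.length
  }

  // True when there is enough traffic and too much of it is failing.
  shouldAlert(): boolean {
    const rate = this.errorRate()
    return this.events.length >= this.minCalls && rate > this.threshold
  }
}
```

Call `record` from the same place you log tool calls, and check `shouldAlert` on a timer or after each failure.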

When NOT to Build an MCP Server

Before you start, ask yourself:

- Do I really need a server? Or could I just make direct API calls?

- Do I need persistence? Or is ephemeral state fine?

- Do I need fine-grained permissions? Or is one shared API key okay?

- How many agents will use this? (If it's just one, you probably don't need MCP)

If you're answering "no" to the first three and only one agent will ever call this, you don't need an MCP server. You need a simpler integration.

Conclusion: The Engineering Difference

The jump from prototype to production isn't a code change. It's a systems thinking change.

You have to think about:

- Who can access this?

- What if it fails?

- What if it's called a million times?

- What happens when we restart?

- How do we know what broke?

This is why so many MCP servers are worthless in production. They skip these questions.

But if you answer them? If you build authentication, permissions, state management, and observability into your server from day one?

You don't have a toy. You have infrastructure.